
Source

- Aliaksei L. Petsiuk and Joshua M. Pearce. Open Source Computer Vision-based Layer-wise 3D Printing Analysis. Additive Manufacturing, 36 (2020), 101473. https://doi.org/10.1016/j.addma.2020.101473. Open access: https://arxiv.org/abs/2003.05660

- Free and open source code: https://osf.io/8ubgn/

Highlights

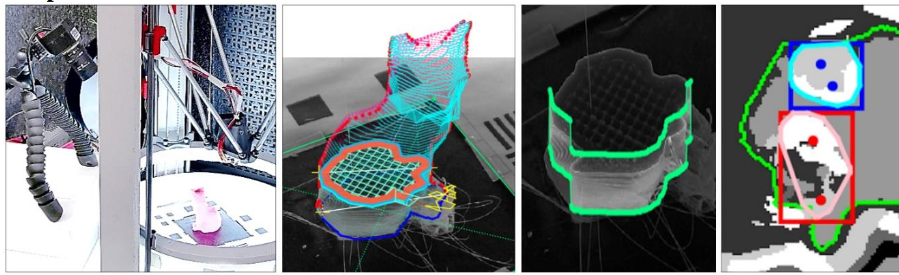

- Developed a visual servoing platform using monocular multistage image segmentation.

- The presented algorithm prevents critical failures during additive manufacturing.

- The developed system tracks printing errors on both the interior and exterior of the printed part.

Abstract

The paper describes an open source computer vision-based hardware structure and software algorithm that analyzes 3-D printing processes layer by layer, tracks printing errors, and generates appropriate printer actions to improve reliability. The approach is built upon multiple-stage monocular image examination, which allows monitoring of both the external shape of the printed object and the internal structure of its layers. Starting with side-view height validation, the developed program analyzes the virtual top view for outer shell contour correspondence using multi-template matching and iterative closest point algorithms. It also assesses inner layer texture quality by clustering spatial-frequency filter responses with Gaussian mixture models and segmenting structural anomalies with an agglomerative hierarchical clustering algorithm. This allows evaluation of both global and local parameters of the printing modes. The experimentally verified analysis time per layer is less than one minute, which can be considered a quasi-real-time process for large prints. The system can work as an intelligent printing suspension tool designed to save time and material. Beyond that, the results show that the algorithm provides a means to systematize in situ printing data as a first step toward a fully open source failure correction algorithm for additive manufacturing.
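To make the outer shell contour correspondence step more concrete, the following is a minimal sketch of a 2-D point-to-point iterative closest point (ICP) alignment in plain Python. It is not the authors' implementation (their code is at the OSF link above). The function names, the brute-force nearest-neighbor search, the synthetic rectangular contour, and the idea of thresholding the residual are all assumptions made for illustration.

```python
import math


def rectangle_contour(width, height, step):
    """Hypothetical reference contour: points on a rectangle outline (mm)."""
    pts = [(float(x), 0.0) for x in range(0, width, step)]
    pts += [(float(width), float(y)) for y in range(0, height, step)]
    pts += [(float(x), float(height)) for x in range(width, 0, -step)]
    pts += [(0.0, float(y)) for y in range(height, 0, -step)]
    return pts


def apply_transform(pts, theta, tx, ty):
    """Rotate points by theta (radians) about the origin, then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def nearest(p, pts):
    """Brute-force nearest neighbor of p among pts."""
    return min(pts, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping paired src onto dst."""
    n = len(src)
    csx = sum(p[0] for p in src) / n
    csy = sum(p[1] for p in src) / n
    cdx = sum(p[0] for p in dst) / n
    cdy = sum(p[1] for p in dst) / n
    s_dot = s_cross = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - csx, sy - csy
        bx, by = dx - cdx, dy - cdy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)  # closed-form optimal angle in 2-D
    c, s = math.cos(theta), math.sin(theta)
    tx = cdx - (c * csx - s * csy)
    ty = cdy - (s * csx + c * csy)
    return theta, tx, ty


def icp_2d(observed, reference, iterations=20):
    """Align observed contour points to the reference contour.

    Returns the aligned points and the RMS nearest-neighbor residual.
    """
    current = list(observed)
    for _ in range(iterations):
        matches = [nearest(p, reference) for p in current]
        theta, tx, ty = best_rigid_2d(current, matches)
        current = apply_transform(current, theta, tx, ty)
    matches = [nearest(p, reference) for p in current]
    sq = sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
             for p, q in zip(current, matches))
    return current, math.sqrt(sq / len(current))


if __name__ == "__main__":
    reference = rectangle_contour(30, 20, 2)
    # Simulate a slightly rotated and shifted contour seen by the camera.
    observed = apply_transform(reference, math.radians(1.0), 0.1, -0.1)
    _, rms = icp_2d(observed, reference)
    print(f"RMS residual after alignment: {rms:.6f} mm")
```

In a monitoring loop, a layer might be flagged when the residual left after alignment exceeds a tolerance chosen for the printer. That threshold is an assumption here and is not specified in the abstract.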

Keywords

3-D printing; additive manufacturing; open-source hardware; RepRap; computer vision; quality assurance; real-time monitoring

See also

- Integrated Voltage—Current Monitoring and Control of Gas Metal Arc Weld Magnetic Ball-Jointed Open Source 3-D Printer

- Low-cost Open-Source Voltage and Current Monitor for Gas Metal Arc Weld 3-D Printing

- Slicer and process improvements for open-source GMAW-based metal 3-D printing

- Open-source Lab

- Open source 3-D printing of OSAT