Conferences

The British Machine Vision Conference (BMVC) https://britishmachinevisionassociation.github.io/bmvc

https://britishmachinevisionassociation.github.io/ - the paper submission deadline is in June.

ICCV 2022: 16th International Conference on Computer Vision (September 22-23, 2022, Vancouver, Canada) https://waset.org/computer-vision-conference-in-september-2022-in-vancouver - abstract/full-text paper submission deadline: March 1, 2022

The 16th Asian Conference on Computer Vision (ACCV 2022) (Macau SAR, China, December 4-8, 2022) https://accv2022.org/en/IMPORTANT-DATES.html - regular paper submission deadline: July 6, 2022

The AAAI Conference on Artificial Intelligence (Vancouver, Canada, February 22–March 1, 2022) https://www.aaai.org/Conferences/AAAI/aaai.php

Machine Learning in Additive Manufacturing

Jian Qin, Fu Hu et al. Research and application of machine learning for additive manufacturing. Additive Manufacturing, 52, April 2022. https://doi.org/10.1016/j.addma.2022.102691

Xinbo Qi, Guofeng Chen et al. Applying Neural-Network-Based Machine Learning to Additive Manufacturing: Current Applications, Challenges, and Future Perspectives. Engineering, 5(4), August 2019. https://doi.org/10.1016/j.eng.2019.04.012

Razvi, SS, Feng, S, Narayanan, A, Lee, YT, & Witherell, P. A Review of Machine Learning Applications in Additive Manufacturing. Proceedings of the ASME 2019 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference. Volume 1: 39th Computers and Information in Engineering Conference. Anaheim, California, USA. August 18–21, 2019. V001T02A040. ASME. https://doi.org/10.1115/DETC2019-98415

Jiang, J., Xiong, Y., Zhang, Z. et al. Machine learning integrated design for additive manufacturing. J Intell Manuf (2020). https://doi.org/10.1007/s10845-020-01715-6

Zeqing Jin, Zhizhou Zhang et al. Machine Learning for Advanced Additive Manufacturing. Matter, 3(5), November 2020. https://doi.org/10.1016/j.matt.2020.08.023

Meng, L., McWilliams, B., Jarosinski, W. et al. Machine Learning in Additive Manufacturing: A Review. JOM 72, 2363–2377 (2020). https://doi.org/10.1007/s11837-020-04155-y

C. Wang, X. P. Tan et al. Machine learning in additive manufacturing: State-of-the-art and perspectives. Additive Manufacturing, 36, December 2020. https://doi.org/10.1016/j.addma.2020.101538

Gibson I., Rosen D., Stucker B., Khorasani M. (2021) Software for Additive Manufacturing. In: Additive Manufacturing Technologies. Springer, Cham. https://doi.org/10.1007/978-3-030-56127-7_17

2022

Development of automated feature extraction and convolutional neural network optimization for real-time warping monitoring in 3D printing

Jiarui Xie, Aditya Saluja, Amirmohammad Rahimizadeh & Kazem Fayazbakhsh, "Development of automated feature extraction and convolutional neural network optimization for real-time warping monitoring in 3D printing", International Journal of Computer Integrated Manufacturing 2022. https://doi.org/10.1080/0951192X.2022.2025621

- Feature extraction is based on G-code analysis and on map matching between the build platform and the captured image. This allows the warping detection algorithm to be applied across different camera angles, part locations, and corner geometries.

- Bayesian optimization is adopted to determine the best hyperparameters for the classification model.

- The CNN model runs on a Raspberry Pi pre-configured with OctoPrint, with plugins coordinating and controlling the camera, 3D printer, and microcomputer.

- Across 16 tests, the warping monitoring system achieved an accuracy of 99.2%.

- Prusa i3 MK2S FFF 3D printer | Raspberry Pi 3 with OctoPrint | Sony A5100 camera

- Fifty-two 30×15×5 mm cuboids were printed individually and recorded layer-by-layer to collect images of unwarped corners.

- The dataset collected for this study consisted of 550 color 6000×4000 px images, divided equally between two classes: warped and unwarped.
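The G-code analysis and map matching described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: it assumes absolute XY and absolute extrusion (E) coordinates, takes the bounding box of all extruding G0/G1 moves as the part footprint, and maps bed coordinates (mm) to image pixels with a known 3×3 homography `H`, which stands in for a hypothetical camera calibration. The names `extrusion_bbox`, `bed_to_pixel`, and `crop_corner` are illustrative.

```python
import numpy as np


def extrusion_bbox(gcode: str):
    """Bounding box (xmin, ymin, xmax, ymax) of all extruding G0/G1 moves.

    Assumes absolute XY (G90) and absolute extrusion (M82) coordinates.
    """
    x = y = e = 0.0
    xs, ys = [], []
    for raw in gcode.splitlines():
        tokens = raw.split(";", 1)[0].split()  # strip comments, split into words
        if not tokens:
            continue
        cmd = tokens[0].upper()
        params = {t[0].upper(): float(t[1:]) for t in tokens[1:]
                  if len(t) > 1 and t[0].upper() in "XYE"}
        if cmd == "G92":  # e.g. "G92 E0" resets the extruder position
            e = params.get("E", e)
            continue
        if cmd not in ("G0", "G1"):
            continue
        nx, ny, ne = params.get("X", x), params.get("Y", y), params.get("E", e)
        if ne > e:  # extruder advanced, so this segment deposits material
            xs += [x, nx]
            ys += [y, ny]
        x, y, e = nx, ny, ne
    if not xs:
        raise ValueError("no extruding moves found")
    return min(xs), min(ys), max(xs), max(ys)


def bed_to_pixel(H, points):
    """Map bed (x, y) positions in mm to image (u, v) pixels with a 3x3 homography H."""
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ np.asarray(H, dtype=float).T
    return mapped[:, :2] / mapped[:, 2:3]


def crop_corner(image, center_uv, half=32):
    """Crop a square region of interest around pixel (u, v), clipped to the image."""
    u, v = np.rint(center_uv).astype(int)
    h, w = image.shape[:2]
    return image[max(v - half, 0):min(v + half, h), max(u - half, 0):min(u + half, w)]


# Example: a 30 x 15 mm rectangle perimeter followed by a non-extruding travel move.
example = """G28
G92 E0
G1 X10 Y10 F3000
G1 X40 Y10 E1.5
G1 X40 Y25 E2.2
G1 X10 Y25 E3.7
G1 X10 Y10 E4.4 ; close the rectangle
G1 X100 Y100 ; travel, no extrusion
"""
xmin, ymin, xmax, ymax = extrusion_bbox(example)
corners = [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]
```

In practice `H` would be estimated by matching known points on the build platform to their pixel positions, and the crop size (`half=32`) is an arbitrary placeholder; each cropped corner would then be passed to the warping classifier.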

2021

Monitoring Anomalies in 3D Bioprinting with Deep Neural Networks

Zeqing Jin, Zhizhou Zhang, Xianlin Shao, and Grace X. Gu, "Monitoring Anomalies in 3D Bioprinting with Deep Neural Networks", ACS Biomater. Sci. Eng. 2021. https://doi.org/10.1021/acsbiomaterials.0c01761

- An anomaly detection system based on layer-by-layer sensor images and machine learning algorithms is developed to distinguish and classify imperfections for transparent hydrogel-based bioprinted materials.

- High anomaly detection accuracy is obtained by utilizing convolutional neural network methods as well as advanced image processing and augmentation techniques on extracted small image patches.

- Along with the prediction of various anomalies, the category of infill pattern and location information on the image patches can be accurately determined.
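Classifying small image patches, as summarized above, can be sketched with a sliding window that keeps the origin of each patch, which is one simple way for location information to stay attached to every per-patch prediction. This is a hypothetical sketch; the patch size and stride are assumed values, not taken from the paper.

```python
import numpy as np


def extract_patches(image, size=64, stride=32):
    """Slide a size x size window over the image.

    Returns the stacked patches and the (row, col) origin of each one, so that a
    per-patch prediction can be mapped back to a location in the full image.
    """
    h, w = image.shape[:2]
    patches, origins = [], []
    for r in range(0, h - size + 1, stride):
        for c in range(0, w - size + 1, stride):
            patches.append(image[r:r + size, c:c + size])
            origins.append((r, c))
    return np.stack(patches), origins
```

For a 128×192 image with these settings, the window yields 3 rows × 5 columns = 15 overlapping patches.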

Precise localization and semantic segmentation detection of printing conditions in fused filament fabrication technologies using machine learning

Jin, Zeqing, Zhizhou Zhang, Joshua David Ott and Grace X. Gu. "Precise localization and semantic segmentation detection of printing conditions in fused filament fabrication technologies using machine learning", Additive Manufacturing 37 (2021): 101696. https://doi.org/10.1016/j.addma.2020.101696

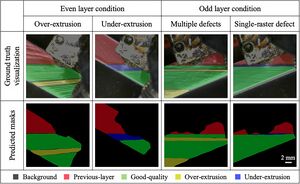

- Localization model: YOLOv3, which performs feature extraction with the Darknet-53 architecture.

- Semantic segmentation: DeepLabv3 architecture, which applies atrous (dilated) convolutions with different sampling rates to rescale the field of view while maintaining resolution.

- The three categories are labeled 'Previous-layer', 'Over-extrusion', and 'Under-extrusion'.

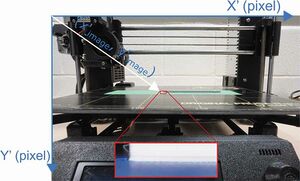

- PRUSA i3 MK3 FFF (PLA) 3D-printer + USB camera 448x448 px

- The full image dataset consists of 1,400 selected images (of 100×25×0.6 mm rectangular sheets, 3 layers) containing quality transitions.

- Images are labeled with labelme, open-source Python software (https://github.com/wkentaro/labelme).
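The atrous convolution mentioned for DeepLabv3 can be illustrated in one dimension. This minimal NumPy sketch is not the DeepLabv3 implementation (which applies 2-D atrous convolutions at several rates in parallel); it only shows the principle: with "same" padding the output keeps the input length, while a rate of `r` spreads a `k`-tap kernel over `(k - 1) * r + 1` input positions.

```python
import numpy as np


def atrous_conv1d(x, w, rate):
    """'Same'-padded 1-D atrous (dilated) convolution.

    Implemented as cross-correlation, as in CNN layers: out[i] = sum_j w[j] * xp[i + j*rate].
    """
    x = np.asarray(x, dtype=float)
    k = len(w)
    span = (k - 1) * rate + 1            # effective kernel extent in input samples
    pad = (span - 1) // 2
    xp = np.pad(x, (pad, span - 1 - pad))  # zero padding keeps the output length equal to len(x)
    out = np.empty(len(x), dtype=float)
    for i in range(len(x)):
        out[i] = sum(w[j] * xp[i + j * rate] for j in range(k))
    return out
```

Feeding a single impulse through the same 3-tap kernel shows the effect: at rate 1 the response covers 3 adjacent samples, at rate 2 it covers a 5-sample span, and both outputs have the same length as the input.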

Artificial Neural Network Algorithms for 3D Printing

Mahmood, Muhammad A., Anita I. Visan, Carmen Ristoscu, and Ion N. Mihailescu. "Artificial Neural Network Algorithms for 3D Printing", MDPI Materials 2021, 14(1), 163. https://doi.org/10.3390/ma14010163

- The study reviews advances in artificial neural networks across several aspects of 3D printing.

Modeling the Producibility of 3D Printing in Polylactic Acid Using Artificial Neural Networks and Fused Filament Fabrication

Meiabadi, Mohammad S., Mahmoud Moradi, Mojtaba Karamimoghadam, Sina Ardabili, Mahdi Bodaghi, Manouchehr Shokri, and Amir H. Mosavi. "Modeling the Producibility of 3D Printing in Polylactic Acid Using Artificial Neural Networks and Fused Filament Fabrication", MDPI Polymers 2021, 13(19), 3219. https://doi.org/10.3390/polym13193219

- An artificial neural network (ANN) and a hybrid ANN-genetic algorithm (ANN-GA) were developed to estimate toughness, part thickness, and production cost as the dependent variables.

- Results were evaluated by correlation coefficient and RMSE values. According to the modeling results, ANN-GA as a hybrid machine learning technique could enhance the accuracy of modeling by about 7.5, 11.5, and 4.5% for toughness, part thickness, and production cost, respectively, in comparison with those for the single ANN method.
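The two evaluation metrics named above, RMSE and the (Pearson) correlation coefficient, can be computed as in this minimal sketch. The paper's exact definition of the percentage "accuracy enhancement" is not given here, so `relative_improvement` is a hypothetical helper that assumes it means the relative reduction of an error metric against the single-ANN baseline.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error between measured and predicted values."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient between measured and predicted values."""
    n = len(y_true)
    mt, mp = sum(y_true) / n, sum(y_pred) / n
    cov = sum((t - mt) * (p - mp) for t, p in zip(y_true, y_pred))
    st = math.sqrt(sum((t - mt) ** 2 for t in y_true))
    sp = math.sqrt(sum((p - mp) ** 2 for p in y_pred))
    return cov / (st * sp)


def relative_improvement(baseline_err, new_err):
    """Percentage reduction of an error metric relative to a baseline (assumed definition)."""
    return 100.0 * (baseline_err - new_err) / baseline_err
```

For example, under this assumed definition, a baseline RMSE of 2.0 reduced to 1.85 would count as a 7.5% improvement.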

Traditional Artificial Neural Networks Versus Deep Learning in Optimization of Material Aspects of 3D Printing

Rojek, Izabela, Dariusz Mikołajewski, Piotr Kotlarz, Krzysztof Tyburek, Jakub Kopowski, and Ewa Dostatni. "Traditional Artificial Neural Networks Versus Deep Learning in Optimization of Material Aspects of 3D Printing", MDPI Materials 2021, 14(24), 7625. https://doi.org/10.3390/ma14247625

- Compares two approaches to optimizing 3D printing properties for the maximum tensile force of an exoskeleton sample: traditional artificial neural networks (ANNs) and a deep learning (DL) approach based on convolutional neural networks (CNNs).

- The results show that DL is an effective tool with significant potential for wide application in the planning and optimization of material properties in the 3D printing process.

2020

Real-Time 3D Printing Remote Defect Detection (Stringing) with Computer Vision and Artificial Intelligence

Paraskevoudis, Konstantinos, Panagiotis Karayannis, and Elias P. Koumoulos. "Real-Time 3D Printing Remote Defect Detection (Stringing) with Computer Vision and Artificial Intelligence", MDPI Processes 2020 8(11), 1464. https://doi.org/10.3390/pr8111464

- Neural networks are developed to identify 3D printing defects during printing by analyzing video of the process, captured on a Prusa i3 MK3S with a camera connected to a Raspberry Pi 4.

- A dataset of 500 images showing stringing was collected to train the Single Shot Detector (a deep neural network). Applying five data augmentation techniques to each of the 500 original training images yielded a final dataset of 2,500 images.

- The Single Shot Detector with 300 × 300 input (SSD-300), published in 2016, achieved a mean Average Precision (mAP) of 74.3% on the PASCAL VOC 2007 benchmark at 59 frames per second (FPS), and an mAP of 41.2% at an Intersection over Union (IoU) of 0.5 on the Common Objects in Context benchmark (COCO test-dev2015). At the time, this mAP outperformed existing state-of-the-art models while running at a high frame rate.

- Network: VGG16, implemented in TensorFlow. The trained model achieved a Precision of 0.44 and a Recall of 0.69 at an IoU of 0.4, a Precision of 0.41 and a Recall of 0.63 at an IoU of 0.5, and a Precision of 0.4 and a Recall of 0.62 at an IoU of 0.6.
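The precision and recall figures above depend on the IoU threshold used to decide whether a predicted box matches a ground-truth box. The following sketch shows one common way to compute them, with greedy one-to-one matching of confidence-sorted predictions. It illustrates the general evaluation idea and is not the paper's evaluation code; the box format `(x1, y1, x2, y2)` is an assumption.

```python
def box_iou(a, b):
    """Intersection over Union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thr=0.5):
    """Greedy one-to-one matching; preds are assumed sorted by descending confidence."""
    matched = set()
    tp = 0
    for p in preds:
        best_j, best_iou = None, iou_thr
        for j, g in enumerate(gts):
            if j in matched:
                continue
            iou = box_iou(p, g)
            if iou >= best_iou:  # must reach the threshold to count as a match
                best_j, best_iou = j, iou
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0  # tp / (tp + fp)
    recall = tp / len(gts) if gts else 0.0         # tp / (tp + fn)
    return precision, recall
```

Raising `iou_thr` can only turn matches into misses, which is why precision and recall in the results above decrease as the IoU threshold goes from 0.4 to 0.6.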

A closed-loop in-process warping detection system for fused filament fabrication using convolutional neural networks

Aditya Saluja, Jiarui Xie, Kazem Fayazbakhsh. "A closed-loop in-process warping detection system for fused filament fabrication using convolutional neural networks", Journal of Manufacturing Processes 2020 (58), pp. 407-415. https://doi.org/10.1016/j.jmapro.2020.08.036

- The paper uses deep learning to develop a warping detection system based on Convolutional Neural Networks (CNNs).

- The system captures the print layer by layer while extracting the corners of the component. Each extracted region of interest is then passed through a CNN that outputs the probability that the corner is warped.

- Tested in an experimental setup, the underlying model achieved a mean accuracy of 99.3%.

- Prusa i3 MK2S 3D printer | Sony A5100 camera | Raspberry Pi with OctoPrint

- Keras | The raw dataset consists of 520 colored, 6000 × 4000-pixel images divided equally into two classes.

- The images for unwarped corners were collected by printing 70×15×5 mm rectangular cuboids.

Toward Enabling a Reliable Quality Monitoring System for Additive Manufacturing Process using Deep Convolutional Neural Networks

Yaser Banadaki, Nariman Razaviarab, Hadi Fekrmandi, Safura Sharifi. "Toward Enabling a Reliable Quality Monitoring System for Additive Manufacturing Process using Deep Convolutional Neural Networks", arXiv:2003.08749 [cs.CV] 2020. https://arxiv.org/abs/2003.08749

- The CNN model is trained offline on images of internal and surface defects in the layer-by-layer deposition of material, and tested online by evaluating its ability to detect and classify failures in the AM process at different extruder speeds and temperatures.

- The model achieves 94% accuracy and 96% specificity, and exceeds 75% on three further classifier measures (F-score, sensitivity, and precision) when classifying the quality of the printing process into five grades in real time.

- The videos from the AM process are captured by a high-definition CCD camera (Lumens DC125). The training data is collected by filming the build of every layer in the AM process and converting the videos to frames.
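The metrics reported above can all be derived from a confusion matrix. For a multi-grade classifier, sensitivity, specificity, precision, and F-score are usually computed one class at a time ("one-vs-rest"). This sketch shows that calculation; the small 3-class matrix in the usage note is made-up illustration data, not results from the paper, which uses five grades.

```python
def _div(a, b):
    """Safe division that returns 0.0 when the denominator is zero."""
    return a / b if b else 0.0


def accuracy(cm):
    """Overall accuracy from a confusion matrix cm[true][pred]."""
    return _div(sum(cm[i][i] for i in range(len(cm))), sum(map(sum, cm)))


def one_vs_rest(cm, k):
    """Treat class k as positive and every other class as negative."""
    n = len(cm)
    total = sum(map(sum, cm))
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                        # truly k, predicted as something else
    fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, truly something else
    tn = total - tp - fn - fp
    sensitivity = _div(tp, tp + fn)             # also called recall
    specificity = _div(tn, tn + fp)
    precision = _div(tp, tp + fp)
    f_score = _div(2 * precision * sensitivity, precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "f_score": f_score}
```

With the hypothetical matrix `[[8, 1, 1], [2, 6, 2], [0, 1, 9]]`, overall accuracy is 23/30, and class 0 has sensitivity 0.8, specificity 0.9, precision 0.8, and F-score 0.8.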

Automatic Volumetric Segmentation of Additive Manufacturing Defects with 3D U-Net

Vivian Wen Hui Wong, Max Ferguson, Kincho H. Law, Yung-Tsun Tina Lee, Paul Witherell, "Automatic Volumetric Segmentation of Additive Manufacturing Defects with 3D U-Net", AAAI 2020 Spring Symposia, Stanford, CA, USA, Mar 23-25, 2020, arXiv:2101.08993 [eess.IV]. https://arxiv.org/abs/2101.08993

- The authors adapt techniques from the medical imaging domain and train a 3D U-Net model to automatically segment defects in X-ray computed tomography (XCT) images of AM samples.

- The work is presented as the first demonstration of 3D volumetric segmentation in AM.

- Three variants of the 3D U-Net are trained and tested on an AM dataset, achieving a mean intersection over union (IoU) of 88.4%.
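Volumetric IoU extends the 2-D definition to voxels: the number of voxels in both the predicted and ground-truth defect masks, divided by the number in either. This NumPy sketch computes it per volume and averages over samples. It is a sketch under one assumption: the paper's "mean IoU" may instead average over classes, and treating two empty masks as IoU 1.0 is a convention choice made here.

```python
import numpy as np


def voxel_iou(pred, target):
    """IoU of two binary 3-D masks (e.g. defect voxels in an XCT volume)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement (convention)
    return np.logical_and(pred, target).sum() / union


def mean_iou(preds, targets):
    """Average the per-volume IoU over a set of samples."""
    return float(np.mean([voxel_iou(p, t) for p, t in zip(preds, targets)]))
```

For instance, a predicted 2×2×3 block that fully contains a 2×2×2 ground-truth block overlaps it in 8 of 12 voxels, giving an IoU of 2/3.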

A review on machine learning in 3D printing: applications, potential, and challenges

Goh, G.D., Sing, S.L. & Yeong, W.Y., "A review on machine learning in 3D printing: applications, potential, and challenges", Artif Intell Rev 2020, 54, pp. 63–94. https://doi.org/10.1007/s10462-020-09876-9

- This article presents a review of the research development concerning the use of ML in AM, especially in the areas of design for 3D printing, process optimization, and in situ monitoring for quality control.

2019

Autonomous in-situ correction of fused deposition modeling printers using computer vision and deep learning

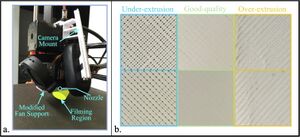

Jin, Zeqing, Zhizhou Zhang, and Grace X. Gu. "Autonomous in-situ correction of fused deposition modeling printers using computer vision and deep learning", Manufacturing Letters 2019 (2), pp. 11-15. https://doi.org/10.1016/j.mfglet.2019.09.005

- The CNN classification model is trained using the ResNet-50 architecture.

- The system detects in-plane printing conditions and corrects defects in situ faster than a human could respond.

- Corrective commands are executed automatically to change printing parameters via Printrun, an open-source 3D printer control GUI (https://github.com/kliment/Printrun).

- An embedded continuous feedback loop captures and classifies new images after each settings change, iterating until a good-quality condition is achieved.

- PRUSA i3 MK3 FFF (PLA) 3D-printer + Logitech C270 USB Cam.

- Videos are recorded and labeled with the corresponding categories: 'Good-quality', 'Under-extrusion' and 'Over-extrusion'.

- For each category, 120,000 images covering two levels of condition are generated by printing a five-layer block measuring 50×50×1 mm.
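The detect-and-correct loop described above can be sketched as a simple controller. This is a hypothetical illustration, not the authors' implementation: `classify` stands in for capturing an image and running the CNN, `send_gcode` stands in for sending a command through the printer host, and adjusting the flow rate with `M221 S<percent>` (the extrusion-multiplier command on Marlin-style firmware) in fixed steps is an assumed correction strategy.

```python
from typing import Callable

# Hypothetical tuning values (assumptions, not from the paper).
STEP = 10                    # flow-rate change per correction, in percent
MIN_FLOW, MAX_FLOW = 50, 150


def correction_loop(classify: Callable[[], str],
                    send_gcode: Callable[[str], None],
                    flow: int = 100, max_iters: int = 10) -> int:
    """Adjust the flow rate until the classifier reports 'Good-quality' (or give up)."""
    for _ in range(max_iters):
        state = classify()  # hypothetical: capture an image and classify it
        if state == "Good-quality":
            return flow
        if state == "Under-extrusion":
            flow = min(flow + STEP, MAX_FLOW)
        elif state == "Over-extrusion":
            flow = max(flow - STEP, MIN_FLOW)
        else:
            raise ValueError(f"unknown state: {state}")
        send_gcode(f"M221 S{flow}")  # set extrusion flow percentage, then re-check
    return flow
```

The loop terminates either when the classifier reports good quality or after `max_iters` attempts, so a misbehaving classifier cannot drive the printer indefinitely; the clamping bounds keep corrections within a plausible range.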

Weld image deep learning-based on-line defects detection using convolutional neural networks for Al alloy in robotic arc welding

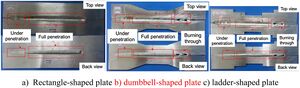

Zhifen Zhang, Guangrui Wen, Shanben Chen. "Weld image deep learning-based on-line defects detection using convolutional neural networks for Al alloy in robotic arc welding", Journal of Manufacturing Processes 2019, 45, pp. 208-216. https://doi.org/10.1016/j.jmapro.2019.06.023

- An 11-layer CNN classification model operating on weld images was designed to identify weld penetration defects.

- Test results show that the CNN model achieves a mean classification accuracy of 99.38%.

- The original weld images (1392×1040 px) are resized to 100×100 px for the CNN model.

- Two kinds of data augmentation were applied to enlarge the original dataset: salt-and-pepper noise addition and rotation by 15 to 30 degrees.

- Future work: a more effective method is needed to optimize the many parameters of the CNN model, and more advanced methods such as R-CNN or Fast R-CNN could be considered for selectively searching regions of interest in the image.
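The preprocessing and augmentation steps listed above (resize to 100×100, salt-and-pepper noise, rotation by 15 to 30 degrees) can be sketched in plain NumPy. This is a minimal illustration with assumed details: nearest-neighbour resampling, a noise fraction of 5%, rotation about the image centre with zero fill, and a positive rotation angle drawn uniformly from 15 to 30 degrees. The paper may have used different choices.

```python
import numpy as np


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize (e.g. 1040 x 1392 -> 100 x 100)."""
    h, w = img.shape[:2]
    rows = (np.arange(out_h) * h / out_h).astype(int)
    cols = (np.arange(out_w) * w / out_w).astype(int)
    return img[rows[:, None], cols[None, :]]


def salt_and_pepper(img, amount, rng):
    """Set a random `amount` fraction of pixels to black (pepper) or white (salt)."""
    out = img.copy()
    mask = rng.random(img.shape[:2])
    out[mask < amount / 2] = 0          # pepper
    out[mask > 1 - amount / 2] = 255    # salt
    return out


def rotate_nearest(img, degrees, fill=0):
    """Rotate about the image centre by inverse-mapping each output pixel."""
    h, w = img.shape[:2]
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2, (w - 1) / 2
    yy, xx = np.mgrid[0:h, 0:w]
    # For each output pixel, find the source pixel it comes from.
    xs = np.cos(theta) * (xx - cx) + np.sin(theta) * (yy - cy) + cx
    ys = -np.sin(theta) * (xx - cx) + np.cos(theta) * (yy - cy) + cy
    xs, ys = np.rint(xs).astype(int), np.rint(ys).astype(int)
    valid = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    out = np.full_like(img, fill)       # pixels rotated in from outside get `fill`
    out[valid] = img[ys[valid], xs[valid]]
    return out


def augment(img, rng):
    """Resize, then return the resized image plus one noisy and one rotated copy."""
    small = resize_nearest(img, 100, 100)
    noisy = salt_and_pepper(small, amount=0.05, rng=rng)
    rotated = rotate_nearest(small, rng.uniform(15, 30))
    return small, noisy, rotated
```

In practice a library routine such as an image-processing package's rotate function would typically be used instead of the hand-written `rotate_nearest`; it is written out here only to keep the sketch dependency-free.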

Automated Real-Time Detection and Prediction of Interlayer Imperfections in Additive Manufacturing Processes Using Artificial Intelligence

Zeqing Jin, Zhizhou Zhang, Grace X. Gu. "Automated Real-Time Detection and Prediction of Interlayer Imperfections in Additive Manufacturing Processes Using Artificial Intelligence", Adv. Intell. Syst. 2019, 2, 1900130. https://doi.org/10.1002/aisy.201900130

- A self-monitoring system based on real-time camera images and deep learning algorithms is developed to classify the various extents of delamination in a printed part.

- A novel method incorporating strain measurements is established to measure and predict the onset of warping.

- Results show that the machine-learning model is capable of detecting different levels of delamination conditions, and the strain measurements setup successfully reflects and determines the extent and tendency of warping before it actually occurs in the print job.

Texture Analysis

From BoW to CNN: Two Decades of Texture Representation for Texture Classification

L. Liu, J. Chen, P. Fieguth, G. Zhao, R. Chellappa, M. Pietikäinen. "From BoW to CNN: Two Decades of Texture Representation for Texture Classification", International Journal of Computer Vision 2019, 127(1), pp. 74–109. https://doi.org/10.1007/s11263-018-1125-z

- A comprehensive survey of advances in texture representation over the last two decades. It cites more than 250 major publications and covers different aspects of the research, including benchmark datasets and state-of-the-art results.
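As a concrete example of a handcrafted texture representation of the kind such surveys cover, the basic local binary pattern (LBP) encodes each pixel by comparing it with its 8 neighbours and describes a texture by the histogram of the resulting codes. This minimal NumPy sketch implements only the basic 3×3 variant, with no rotation invariance or uniform-pattern mapping, and is illustrative rather than a reference implementation.

```python
import numpy as np


def lbp_histogram(gray):
    """Basic 3x3 local binary pattern codes and their normalized 256-bin histogram."""
    g = np.asarray(gray, dtype=float)
    h, w = g.shape
    c = g[1:-1, 1:-1]  # interior pixels (border pixels lack a full neighbourhood)
    # Neighbour offsets in clockwise order, starting at the top-left.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(c.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        nb = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]  # neighbour aligned with each centre
        codes |= (nb >= c).astype(np.int64) << bit    # set the bit if neighbour >= centre
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return codes, hist / hist.sum()
```

A flat region produces code 255 everywhere (all neighbours are at least as bright as the centre), while an isolated bright spot produces code 0; the histogram of codes over an image region serves as its texture descriptor.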

Semantic Segmentation

Image Segmentation in 2021: Architectures, Losses, Datasets, and Frameworks

Detectron2

Detectron2 is Facebook AI Research's next-generation library that provides state-of-the-art detection and segmentation algorithms. It is the successor of Detectron and maskrcnn-benchmark, and it supports a number of computer vision research projects and production applications at Facebook.

Deep semantic segmentation of natural and medical images: a review

Asgari Taghanaki, S., Abhishek, K., Cohen, J.P. et al. Deep semantic segmentation of natural and medical images: a review. Artif Intell Rev 54, 137–178 (2021). https://doi.org/10.1007/s10462-020-09854-1

Fruit detection for strawberry harvesting robot in non-structural environment based on Mask-RCNN

Yang Yu, Kailiang Zhang, Li Yang, Dongxing Zhang. Fruit detection for strawberry harvesting robot in non-structural environment based on Mask-RCNN, Computers and Electronics in Agriculture 2019, 163, 104846. https://doi.org/10.1016/j.compag.2019.06.001